The QSFP+ optical transceiver is the dominant form factor for 40 Gigabit Ethernet applications. In 2010, the IEEE 802.3ba standard defined several 40 Gbps interfaces, including 40GBASE-SR4, a parallel-optics solution for multimode fiber. Since then, other 40G interfaces have been introduced, including 40GBASE-CSR4, which is similar to 40GBASE-SR4 but extends the reach over multimode fiber.

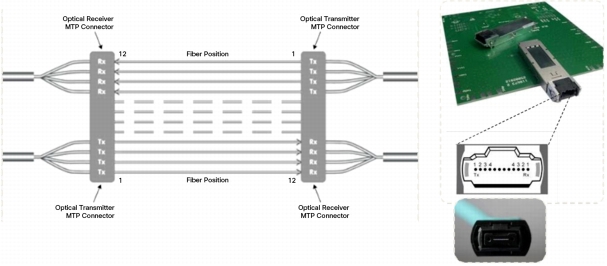

Two switches are connected using either transceiver modules or cables. For example, if you simply want to cable up two Nexus 3000s with 40GbE, the options are multimode fiber or twinax copper. This post covers only fiber, since that is where most of the questions have come from in recent years. So, once you have chosen fiber, how do you connect these switches into the network? First, insert the QSFP+ optic, just as you would insert an optic for standard 1G or 10G connectivity. For the Nexus 3000, only multimode fiber is available, so the Cisco part number needed is QSFP-40G-CSR4. This is the equivalent of the GLC-LH-SMD or SFP-10G-SR for 1G and 10G, respectively. However, the connector type for the QSFP-40G-CSR4 is no longer LC but MPO (multi-fiber push-on).

It is worth noting that native 40GbE fiber cables contain 12 fiber strands, of which 40GBASE-SR4 uses eight (four to transmit and four to receive). For 40GBASE-SR4, the distance limits are 100 m over OM3 and 150 m over OM4 fiber. Because these cables use MPO connectors, carry 12 strands, and are ribbon cables, native 40GbE links will not be able to use any of your existing fiber optic cable plant. So be prepared to home run these cables where needed throughout the data center.
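To make the reach limits concrete, here is a minimal Python sketch that checks whether a planned native 40GBASE-SR4 run fits within the OM3/OM4 distances. The function names are hypothetical, and the MPO-12 position layout (transmit on positions 1-4, receive on 9-12, positions 5-8 unused) is an added assumption based on the common SR4 assignment; check it against your optic's datasheet.

```python
# 40GBASE-SR4 reach over multimode fiber, in meters (from the text above).
SR4_REACH_M = {"OM3": 100, "OM4": 150}

# Assumed MPO-12 layout for SR4: positions 1-4 transmit, 9-12 receive.
SR4_TX_POSITIONS = range(1, 5)
SR4_RX_POSITIONS = range(9, 13)


def sr4_link_ok(fiber_type: str, length_m: float) -> bool:
    """Return True if a native 40GBASE-SR4 run of this length is within reach."""
    reach = SR4_REACH_M.get(fiber_type.upper())
    if reach is None:
        raise ValueError(f"unsupported fiber type: {fiber_type!r}")
    return 0 < length_m <= reach


def unused_positions() -> list[int]:
    """MPO-12 positions present in the ribbon but not used by SR4."""
    used = set(SR4_TX_POSITIONS) | set(SR4_RX_POSITIONS)
    return [p for p in range(1, 13) if p not in used]


if __name__ == "__main__":
    print(sr4_link_ok("OM3", 90))
    print(sr4_link_ok("OM3", 120))
    print(sr4_link_ok("OM4", 120))
    print(unused_positions())
```

A 120 m run fails on OM3 but passes on OM4, which is often the deciding factor when choosing fiber grade for new home runs.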

However, you may not always need native 40GbE between two switches; you may instead opt to configure multiple 10GbE interfaces. In this case, a QSFP+ optic (QSFP-40G-SR4 or QSFP-40G-CSR4) is still needed, but the cable is different from the one described above, and reusing the current cable plant becomes possible. The required cable has an MPO connector on one end, which plugs into the QSFP+ port, and "breaks out" into four individual fiber links on the other end. These breakout cables terminate with LC male connectors, so I like to call them MTP-LC harness cables (MTP is a brand of MPO connector). An MTP-LC harness cable directly connects one QSFP+ port to four SFP+ ports. In most data center deployments, though, structured cabling is used instead, built from MTP trunks and patch panels.
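The breakout idea can be sketched as a simple lane map. The Python below assumes a common MPO-12 to 4x LC duplex harness pinout in which leg n pairs transmit fiber n with receive fiber 13 - n (1/12, 2/11, 3/10, 4/9). That pinout, the `breakout_legs` function, and the `Eth1/49/1`-style leg labels are illustrative assumptions, not a specific vendor's design; harness pinouts vary, so verify against your cable's datasheet.

```python
def breakout_legs(qsfp_port: str) -> list[dict]:
    """Map one QSFP+ port to four 10GbE LC duplex legs.

    Assumed pinout: leg n uses MPO transmit fiber n and receive fiber 13 - n.
    Leg labels are hypothetical, not a particular switch's interface names.
    """
    legs = []
    for lane in range(1, 5):
        legs.append({
            "leg": f"{qsfp_port}/{lane}",  # hypothetical label, e.g. "Eth1/49/1"
            "tx_fiber": lane,              # QSFP+ transmit fiber (positions 1-4)
            "rx_fiber": 13 - lane,         # QSFP+ receive fiber (positions 12-9)
        })
    return legs


if __name__ == "__main__":
    for leg in breakout_legs("Eth1/49"):
        print(leg)
```

Each leg then lands on one SFP+ port at the far end, where the QSFP+ transmit fiber becomes that SFP+ port's receive fiber.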

It is convenient that these cables terminate with LC male connectors, because customers can then leverage their current cable infrastructure, assuming the existing patch panels have LC interfaces and OM3/OM4 fiber is used throughout the data center. Breakout cables are also useful if you want to attach a northbound switch that supports only 10GbE interfaces. You can connect directly, or jump through a patch panel in the data center, to link the Nexus 3000 via multiple 10GbE interfaces to a Nexus 7000 (or any other switch with 10GbE-only interfaces).
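When planning such a connection, the port and cable counts follow from simple arithmetic: each QSFP+ port broken out this way yields four 10GbE links. The sketch below, built around a hypothetical `plan_breakout` helper, assumes every QSFP+ port is fully broken out and that each 10GbE leg lands on its own SFP+ port on the northbound switch.

```python
from dataclasses import dataclass


@dataclass
class BreakoutPlan:
    qsfp_ports: int        # QSFP+ ports on the Nexus 3000 side
    sfp_ports_needed: int  # 10GbE SFP+ ports needed on the northbound switch
    lc_duplex_legs: int    # LC duplex legs (and patch panel ports per hop)
    aggregate_gbps: int    # total 10GbE bandwidth across all legs


def plan_breakout(qsfp_ports: int, lanes_per_port: int = 4,
                  lane_gbps: int = 10) -> BreakoutPlan:
    """Hypothetical planner: every QSFP+ port is split into 4 x 10GbE."""
    if qsfp_ports < 0:
        raise ValueError("qsfp_ports must be non-negative")
    links = qsfp_ports * lanes_per_port
    return BreakoutPlan(qsfp_ports, links, links, links * lane_gbps)


if __name__ == "__main__":
    print(plan_breakout(2))
```

For two broken-out QSFP+ ports, the Nexus 7000 side needs eight SFP+ ports, and eight LC duplex legs cross any patch panel in the path.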

Alternatively, direct attach cables terminated with QSFP+ connectors can be used for 40G connectivity. For instance, the HP JG331A-compatible QSFP+ to 4x SFP+ direct attach copper cable has one QSFP+ connector on one end and four SFP+ connectors on the other.

40G QSFP+ cables can provide inexpensive and reliable 40G connections, using either copper cables up to 10 m (about 33 ft) long or active optical cables up to 100 m (about 330 ft) long. Because no separate, more expensive fiber transceivers and optical patch cables are needed, the cost of local NOC connectivity is significantly reduced.
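The distances quoted above suggest a simple rule of thumb for choosing 40G media. The hypothetical `choose_40g_media` function below uses only the upper bounds given in this post (10 m for copper, 100 m for active optical cables); real cables come in fixed lengths and supported lengths vary by vendor, so treat it as a sketch rather than a sizing tool.

```python
COPPER_MAX_M = 10   # upper bound for QSFP+ copper cables, per the text
AOC_MAX_M = 100     # upper bound for QSFP+ active optical cables, per the text


def choose_40g_media(distance_m: float) -> str:
    """Suggest a 40G media type for a point-to-point link of this length."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= COPPER_MAX_M:
        return "QSFP+ direct attach copper"
    if distance_m <= AOC_MAX_M:
        return "QSFP+ active optical cable"
    # Beyond AOC reach: separate optics over structured fiber, still limited
    # by the optic's own reach (e.g. 40GBASE-SR4: 100 m OM3, 150 m OM4).
    return "QSFP+ optics over MPO fiber"


if __name__ == "__main__":
    for d in (3, 50, 120):
        print(d, choose_40g_media(d))
```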
